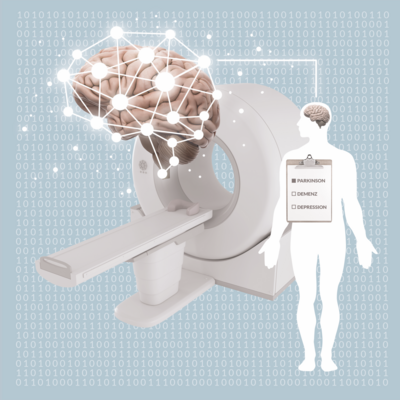

Medicine of the future: What framework is needed for AI?

Artificial intelligence is revolutionizing medicine. But doctors and developers also have to deal with the risks: The FRAIM project aims to find answers.

A guest article by Prof. Bert Heinrichs and Anna Geiger of the Institute of Neuroscience and Medicine: Brain and Behavior (INM-7) at Forschungszentrum Jülich.

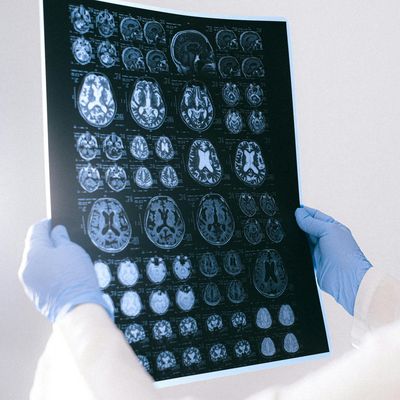

Artificial intelligence (AI) is no longer science fiction. Almost unnoticed, it has become part of our everyday lives in countless areas. For instance, we encounter AI in programs that optimize production processes at work and in the recommendation algorithms that streaming services use to suggest films to us. AI has also made its way into medicine. Worldwide, researchers are working on making diagnoses faster and more precise and on personalizing treatment recommendations. Many experts are even convinced that AI could soon bring about a genuine revolution in medicine. After all, with its large amounts of data and proximity to science, medicine is virtually predestined for the use of AI.

Prof. Bert Heinrichs, Forschungszentrum Jülich

Bert Heinrichs is professor of ethics and applied ethics at the Institute for Science and Ethics (IWE) at the University of Bonn and leads the research group “Neuroethics and Ethics of AI” at the Institute of Neuroscience and Medicine: Brain and Behavior (INM-7) at Forschungszentrum Jülich. He studied philosophy, mathematics and education in Bonn and Grenoble. He received his MA in 2001, followed by a doctorate in 2007 and his habilitation in 2013. Prior to his current position, he was Head of the Scientific Department of the German Reference Center for Ethics in the Life Sciences (DRZE). He works on topics in neuroethics, the ethics of AI, research ethics and medical ethics. He is also interested in questions of contemporary metaethics.

A few years ago, for example, an algorithm was presented that is intended to facilitate the diagnosis of skin cancer. An AI model analyzes an image of a suspicious skin change taken with a smartphone camera. It turned out that the results were just as good as traditional diagnoses by specialists, while the AI-based diagnosis was much faster and cheaper. More importantly, it could also be made available to people living in regions where only a few specialists practice and appointments are correspondingly scarce.

Many unresolved questions

However, the use of AI in medicine also carries many risks. The example of skin cancer diagnosis makes this clear. An initial version of the system made errors that were traced back to markings on images in the training data. It also turned out that the system worked much more reliably for certain skin types than for others. Again, this was due to the training data: certain groups were less well represented in it than others. Further questions arise here: Who is responsible for a misdiagnosis? How secure is sensitive health data in such a system? And who has access to it, and what can it be used for?

Anna Geiger, Forschungszentrum Jülich

Anna Geiger studied neuropsychology at Maastricht University and has been working as a scientific coordinator at the Institute of Neuroscience and Medicine: Brain and Behavior (INM-7) at Forschungszentrum Jülich since 2018. There, she acts as an interface between administration and science, takes on coordination and management tasks and is active in the field of science communication.

In order to ensure the responsible use of AI in medicine, these and other ethical, legal and social issues must be addressed in conjunction with technical development. Forschungszentrum Jülich's Institute of Neuroscience and Medicine has therefore launched the project "FRAIM. Beyond mere performance: an ethical framework for the use of AI in neuromedicine". It is an interdisciplinary joint project funded by the Federal Ministry of Education and Research (BMBF) and involves partners from the fields of neuroscience, psychology, ethics and law at the Universities of Düsseldorf and Bonn. Their common goal is to provide a framework for the evaluation of AI procedures used in medical diagnostics and decision-making.

Empirical studies on the acceptance of AI

So far, too little is known about the factors that lead doctors and patients to trust the use of AI in medicine. As part of FRAIM, the researchers are therefore conducting surveys, expert interviews and focus groups: What do those affected think about the use of AI in healthcare? What concerns do they have? What hopes do they have? What factors influence trust in AI technologies? In this way, the researchers aim to obtain a comprehensive picture of the views of all those affected by the use of AI in medicine. FRAIM will evaluate the results and publish them at the end of the project. Developers can then use these analyses to design their AI applications in such a way that they are accepted by society.

Ethics and law

The use of AI in medicine also raises numerous ethical and legal questions that should be taken into account at the development stage. Established concepts such as autonomy, responsibility, accountability and liability need to be reviewed to see whether they are still valid in light of the new technology or whether they need to be revised: How does AI affect the autonomy of doctors and patients? What influence does it have on the doctor-patient relationship? To what extent can responsibility be delegated to AI tools? To clarify these questions, the FRAIM project is compiling an overview of the current ethical debate on medical AI and working on solutions to enable the responsible use of AI in medicine. FRAIM's legal experts are taking a close look at areas of law such as medical device law, the General Data Protection Regulation and medical professional law and are considering their implications: Do they need to be adapted and, if so, what could new regulations look like?

The FRAIM project is expected to run until 2025, and the end result should be more than just a series of individual findings that are of interest to various specialist communities. Instead, the results of the empirical, ethical and legal sub-projects will be compiled and related to each other. The aim is for a theoretically sound and empirically validated framework for evaluating the use of AI-based procedures in medical diagnostics and decision-making to emerge at the end of FRAIM. This work will not only be an important tool for researchers, developers and physicians but will also support policy-makers and society in dealing with the new technologies. In this way, FRAIM aims to help ensure that the great opportunities that are undoubtedly associated with the use of AI in medicine can be seized responsibly.